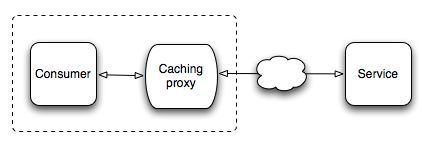

Caching proxy fronted web consumer

Consider an application that, as part of its functionality, queries a product search web service.

require 'net/http'

url = URI.parse('http://www.example.com')

Net::HTTP.start(url.host, url.port) do |http|

http.get('/product-search?q=guitar') # the query belongs in the path; the second argument to get is a header hash

end

Inspecting the response headers, we notice the web service instructs consumers that the results of the query will remain the same for one hour.

curl -I "http://www.example.com/product-search?q=guitar"

HTTP/1.1 200 OK

Content-Type: text/html

Cache-Control: max-age=3600, must-revalidate

Content-Length: 32650

Date: Sat, 14 Feb 2009 13:53:31 GMT

Age: 0

Connection: keep-alive
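To make the "one hour" reading concrete, here is a minimal sketch of how a consumer might turn those headers into a number of seconds. The helper names `parse_cache_control` and `remaining_freshness` are made up for this illustration, and the sketch only looks at `max-age` and `Age`; a full implementation would also need to consider directives such as `no-cache` and `no-store`.

```ruby
# Parse a Cache-Control header value into a hash of directives.
# Directives without a value (e.g. must-revalidate) map to true.
def parse_cache_control(value)
  value.to_s.split(',').each_with_object({}) do |directive, result|
    name, arg = directive.strip.split('=', 2)
    next if name.nil? || name.empty?
    result[name.downcase] = arg ? arg.delete('"') : true
  end
end

# Seconds the response may still be reused without contacting the service:
# max-age minus the time it has already spent in caches (the Age header).
def remaining_freshness(cache_control, age)
  max_age = parse_cache_control(cache_control)['max-age']
  return 0 unless max_age.is_a?(String)
  [max_age.to_i - age.to_i, 0].max
end

remaining_freshness('max-age=3600, must-revalidate', '0') # => 3600
```

With the headers shown above, the response is fresh for 3600 seconds, i.e. one hour.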

At this point we could ignore the Cache-Control header and keep querying the service for this resource, even though the response will be the same for the next hour. This is suboptimal for the consumer, which suffers unnecessary latency; for the service, which has to answer redundant requests; and for the network, which carries unnecessary traffic. Another option is to make the web consumer aware of the service's caching policy so that it only requests data it doesn't have or data that has become stale. This remedies the above problems but adds complexity to the consumer.
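To give a feel for the complexity the second option brings, here is a rough sketch of a cache living inside the consumer. The `FreshnessCache` class is hypothetical and deliberately naive: it keeps entries in memory, honours only `max-age`, is not thread-safe, and re-fetches stale entries instead of revalidating them with conditional requests.

```ruby
# A minimal in-memory cache keyed by request path (illustrative only).
class FreshnessCache
  Entry = Struct.new(:body, :expires_at)

  # The clock is injectable so expiry can be exercised without waiting.
  def initialize(clock: -> { Time.now })
    @clock = clock
    @entries = {}
  end

  # The block performs the real request and returns [body, cache_control].
  # It is only called when there is no fresh entry for the key.
  def fetch(key)
    entry = @entries[key]
    return entry.body if entry && @clock.call < entry.expires_at

    body, cache_control = yield
    max_age = cache_control.to_s[/max-age=(\d+)/, 1].to_i
    @entries[key] = Entry.new(body, @clock.call + max_age) if max_age > 0
    body
  end
end

# Possible usage inside the consumer (performs a real request, so it is
# left commented out here):
#
#   cache = FreshnessCache.new
#   body = cache.fetch('/product-search?q=guitar') do
#     response = http.get('/product-search?q=guitar')
#     [response.body, response['Cache-Control']]
#   end
```

Even this small version has to parse headers, track expiry, and decide what to store, and every consumer of the service would need something similar.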

A third option is to introduce a caching proxy into the web consumer's stack, one that mediates the interactions between service and consumer based solely on the content's caching characteristics.

Benefits of this approach include:

- The consumer never has to deal with any caching logic.
- No effort is spent re-implementing cache-handling code.
- The caching engine is likely to perform better than custom caching code in the consumer, because it has been built and optimized for this purpose.
- The caching proxy can be reused by more than one type of consumer, or by more than one instance of the same consumer in the stack.

As a possible side effect, the caching proxy adds a layer to the consumer stack, which can introduce some network latency on the consumer's LAN.

Here's the configuration needed to use Varnish as a caching proxy for the web consumer in the above example.

# varnish.conf

backend default {

.host = "www.example.com";

.port = "http";

}

The only thing that changes in the consumer is the address it directs its requests to.

url = URI.parse('http://service-proxy')

Net::HTTP.start(url.host, url.port) do |http|

http.get('/product-search?q=guitar') # the query belongs in the path; the second argument to get is a header hash

end
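Since the address is the only difference, one way to avoid touching the code at all is to read it from configuration. The sketch below assumes an environment variable named `PRODUCT_SERVICE_URL`, which is invented for this example, and falls back to the origin service when it is not set. The helper `product_search_uri` is likewise hypothetical.

```ruby
require 'uri'

# Fallback when no proxy is configured: talk to the service directly.
DEFAULT_SERVICE_URL = 'http://www.example.com'

# Build the product search URI against whichever address is configured.
# PRODUCT_SERVICE_URL is an assumed variable name, not an established one.
def product_search_uri(term, env = ENV)
  uri = URI.parse(env.fetch('PRODUCT_SERVICE_URL', DEFAULT_SERVICE_URL))
  uri.path = '/product-search'
  uri.query = URI.encode_www_form('q' => term)
  uri
end

# Possible usage (performs a real request, so it is left commented out):
#
#   require 'net/http'
#   uri = product_search_uri('guitar')
#   Net::HTTP.start(uri.host, uri.port) { |http| http.get(uri.request_uri) }
```

Setting `PRODUCT_SERVICE_URL=http://service-proxy` in the consumer's environment would then route requests through the proxy, and unsetting it would send them straight to the service.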